“Hooray for science!”

Today we’re talking about the Millennium Simulation. I know this is an old story, dating as it does to 2005, but it’s got three different angles that appeal to my inner science geek, plus a little something extra. You could start by reading a summary, or the Guardian article about the project, to get an idea of what it was about, and then move on to the press release from the Max Planck Society’s Supercomputing Centre.

Essentially, to better understand how matter interacts during the formation of galaxies and other structures, these scientists set up the largest N-body problem ever attempted, simulating a gigantic portion of the universe, and then ran it forward through billions of simulated years to see how the particles interacted to form the structure of the universe. In short, they built a virtual baby universe in a can.
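
For anyone who hasn’t met an N-body problem before, the core idea fits in a few lines: every particle pulls on every other particle, and you repeatedly compute those pulls and nudge positions and velocities forward by a small time step. Below is a toy sketch in Python, assuming arbitrary units (G = 1), a small “softening” length so close encounters don’t blow up, and a kick-drift-kick leapfrog integrator. This is a brute-force illustration only; it is not the Millennium team’s code, which used far more sophisticated tree and particle-mesh methods and worked in an expanding cosmological background, because this O(N²) approach would be hopeless at 10 billion particles.

```python
import math

# Toy direct-summation N-body integrator (illustrative only).
# Assumptions: arbitrary units with G = 1, and a softening length that
# keeps the force finite when two particles pass very close together.
G = 1.0
SOFTENING = 0.05

def accelerations(positions, masses):
    """Return the gravitational acceleration on every particle (O(N^2) pairs)."""
    n = len(positions)
    acc = [[0.0, 0.0, 0.0] for _ in range(n)]
    for i in range(n):
        for j in range(n):
            if i == j:
                continue
            dx = [positions[j][k] - positions[i][k] for k in range(3)]
            r2 = sum(d * d for d in dx) + SOFTENING ** 2
            inv_r3 = 1.0 / (r2 * math.sqrt(r2))
            for k in range(3):
                acc[i][k] += G * masses[j] * dx[k] * inv_r3
    return acc

def step(positions, velocities, masses, dt):
    """Advance the system by one kick-drift-kick leapfrog step (updates lists in place)."""
    acc = accelerations(positions, masses)
    for i in range(len(positions)):
        for k in range(3):
            velocities[i][k] += 0.5 * dt * acc[i][k]  # first half kick
            positions[i][k] += dt * velocities[i][k]  # drift
    acc = accelerations(positions, masses)
    for i in range(len(positions)):
        for k in range(3):
            velocities[i][k] += 0.5 * dt * acc[i][k]  # second half kick
    return positions, velocities

# Usage: two equal masses released at rest start falling toward each other.
pos = [[-1.0, 0.0, 0.0], [1.0, 0.0, 0.0]]
vel = [[0.0, 0.0, 0.0], [0.0, 0.0, 0.0]]
mass = [1.0, 1.0]
for _ in range(10):
    pos, vel = step(pos, vel, mass, 0.01)
print(f"separation after 10 steps: {pos[1][0] - pos[0][0]:.6f}")
```

Leapfrog is a popular choice for gravitational simulations because it keeps long-run energy errors bounded, which matters when you integrate over billions of (simulated) years.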

Well, that’s pretty cool, but where’s the specific appeal?

First there’s the appeal to the cosmological geek in me: they ran a simulation that traced the behavior of more than 10 billion “particles” during the early development of the universe. The region of space simulated was a cube about 2 billion light years on a side, populated by about 20 million “galaxies”. So not only is there a “this is awesome, they’re trying to figure out how the universe happened” thing, but there’s also a “the scale here is insane” thing.
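
To put those numbers in perspective, here is a quick back-of-the-envelope calculation. It uses only the rounded figures above (a cube 2 billion light years on a side, 10 billion particles, 20 million galaxies) and pretends they are spread evenly through the box, which the real universe very much isn’t, so treat the results as orders of magnitude only.

```python
# Back-of-the-envelope scale check using the rounded figures quoted above.
# Assumption: particles and galaxies are treated as if spread evenly,
# so these are "typical spacing" estimates, not real measurements.
BOX_SIDE_LY = 2e9      # cube side, light years (rounded)
N_PARTICLES = 10e9     # "more than 10 billion" particles
N_GALAXIES = 20e6      # "about 20 million" galaxies

volume_ly3 = BOX_SIDE_LY ** 3                                # cubic light years
mean_spacing_ly = (volume_ly3 / N_PARTICLES) ** (1 / 3)      # typical particle spacing
galaxy_spacing_ly = (volume_ly3 / N_GALAXIES) ** (1 / 3)     # typical galaxy spacing

print(f"box volume:            {volume_ly3:.1e} cubic light years")
print(f"mean particle spacing: {mean_spacing_ly:,.0f} light years")
print(f"mean galaxy spacing:   {galaxy_spacing_ly:,.0f} light years")
```

That works out to a typical particle spacing of a bit under a million light years and a typical galaxy spacing of a little over seven million light years, which gives a sense of how coarse each individual “particle” is even in the largest simulation of its day.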

Second, there’s the appeal to the computer geek in me: the simulation kept the principal supercomputer at the Max Planck Society’s Supercomputing Centre in Garching, Germany (a cluster of 512 processors) occupied for more than a month. The simulation took a total of 28 days (~670 hours) of wall-clock time, and thus consumed around 343,000 hours of CPU time. It generated 25 terabytes of data, which was then actually analyzed. Can you imagine that? It’s insane. Generating 25 TB of data is easy. Generating 25 TB of useful data is ridiculously hard. Actually analyzing that much data once it’s been created? That’s crazy.
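
The CPU-time figure follows from simple multiplication. A quick check, assuming all 512 processors were busy for the full 28 days:

```python
# Checking the compute budget quoted above.
# Assumption: every one of the 512 processors ran for the entire 28 days.
PROCESSORS = 512
WALL_CLOCK_DAYS = 28

wall_clock_hours = WALL_CLOCK_DAYS * 24      # 672 hours
cpu_hours = PROCESSORS * wall_clock_hours    # 344,064 processor-hours
cpu_years = cpu_hours / (24 * 365)           # the same work on a single processor

print(f"wall clock: {wall_clock_hours} h")
print(f"CPU time:   {cpu_hours:,} h (~{cpu_years:.0f} years on one processor)")
```

That comes to 672 hours of wall-clock time and 344,064 processor-hours, in line with the ~343,000 quoted, or roughly 39 years of nonstop work for a single processor.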

Also, consider that within 5 to 10 years we’ll likely all be able to do this at our desks. The computers on my desk now have about 15% of the storage space required and about 8% of the computing power; if Moore’s law holds, it will be less than 10 years before my desktop machines can run this simulation in the same amount of time it took the monster supercomputer. Yes, within 10 years I will probably be able to simulate the behavior of 8×10²⁷ cubic light years of space through billions of years. That’s nuts.
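
Here’s the arithmetic behind the “less than 10 years” estimate. Strictly speaking, Moore’s law is about transistor counts, so this sketch uses it loosely as “capacity doubles every N years”; the 1.5- and 2-year doubling periods are assumptions, not measurements.

```python
import math

def years_to_catch_up(current_fraction, doubling_time_years):
    """Years until capacity grows from current_fraction of a target to 100% of it,
    assuming capacity doubles every doubling_time_years."""
    doublings_needed = math.log2(1.0 / current_fraction)
    return doublings_needed * doubling_time_years

# Fractions come from the paragraph above; the doubling periods are assumed.
for label, fraction in [("storage", 0.15), ("computing power", 0.08)]:
    for doubling in (1.5, 2.0):
        years = years_to_catch_up(fraction, doubling)
        print(f"{label}: {years:.1f} years if capacity doubles every {doubling} years")
```

Going from 8% to 100% of the computing power takes about 3.6 doublings, so even at the slower two-year pace the desktop catches up in a little over 7 years; storage, starting from 15%, needs fewer than 3 doublings.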

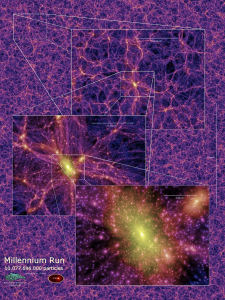

And finally, there’s the visualization angle. Analyzing the data yields all kinds of useful information, such as explanations for the results from the Sloan Digital Sky Survey (which could be a whole other discussion on its own). It also yields some very pretty pictures and some interesting movies. It’s cool enough to have three-dimensional information on the behavior of 10 billion particles over billions of years, but it’s even cooler to render that information into a visualization that humans can apprehend far more readily than 25 TB of numbers.
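
One simple way to turn a pile of 3D particle positions into a picture is a density projection: flatten the box along one axis, count how many particles land in each cell of a 2D grid, and map the counts to brightness on a log scale so faint filaments don’t vanish next to dense clumps. The toy sketch below does exactly that and prints the result as text. The particle data here is made up (a diagonal “filament” plus a uniform background), and this is only an illustration of the general idea, not the rendering pipeline used for the Millennium images and movies.

```python
import math
import random

def project_density(particles, box_size, grid_size):
    """Bin 3D positions into a 2D grid by dropping z. Returns grid[row][col] counts."""
    grid = [[0] * grid_size for _ in range(grid_size)]
    cell = box_size / grid_size
    for x, y, _z in particles:
        # Wrap into a periodic box (as cosmological boxes usually are);
        # min() guards against a coordinate landing exactly on box_size.
        col = min(int((x % box_size) / cell), grid_size - 1)
        row = min(int((y % box_size) / cell), grid_size - 1)
        grid[row][col] += 1
    return grid

def to_ascii(grid, shades=" .:*#@"):
    """Render counts as characters on a log scale so faint structure stays visible."""
    peak = max(max(row) for row in grid)
    lines = []
    for row in grid:
        chars = []
        for count in row:
            level = math.log1p(count) / math.log1p(peak) if peak else 0.0
            chars.append(shades[min(int(level * len(shades)), len(shades) - 1)])
        lines.append("".join(chars))
    return "\n".join(lines)

# Hypothetical toy data: a noisy diagonal filament plus uniform background particles.
random.seed(42)
BOX = 100.0
particles = []
for _ in range(3000):
    t = random.uniform(0, BOX)
    particles.append((t + random.gauss(0, 2), t + random.gauss(0, 2), random.uniform(0, BOX)))
for _ in range(1000):
    particles.append((random.uniform(0, BOX), random.uniform(0, BOX), random.uniform(0, BOX)))

grid = project_density(particles, BOX, 24)
print(to_ascii(grid))
```

The log scaling is the important design choice: particle counts in a cosmological simulation span many orders of magnitude between voids and cluster cores, and a linear scale would show little more than a few bright dots.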

Oh, and the little something extra? Well, take a look at the images of the structure of the universe that the simulation generates, like, say, this one. Then look at this image. See the similarity? That image is a bunch of neurons. Tell me you don’t love the idea of the entire universe as a gigantic brain. Tell me that you wouldn’t have loved to have that image in your head during some of those late-night undergrad conversations about “deep” subjects.

Anyway, here are some links to help you find out more, if your interest is piqued. First there’s the actual paper, which can be retrieved from the Planck Centre’s website. Then there are the visualizations. Then, possibly coolest of all, there’s the publicly accessible database of the simulation data. That just blows my mind: all of this data is there and searchable… Wow! You can also find a list of papers based on the simulation data at that site. There’s also a page on the simulation, with a list of resource links and links to articles, at The Virgo Consortium for Cosmological Supercomputer Simulations site.